Introduction

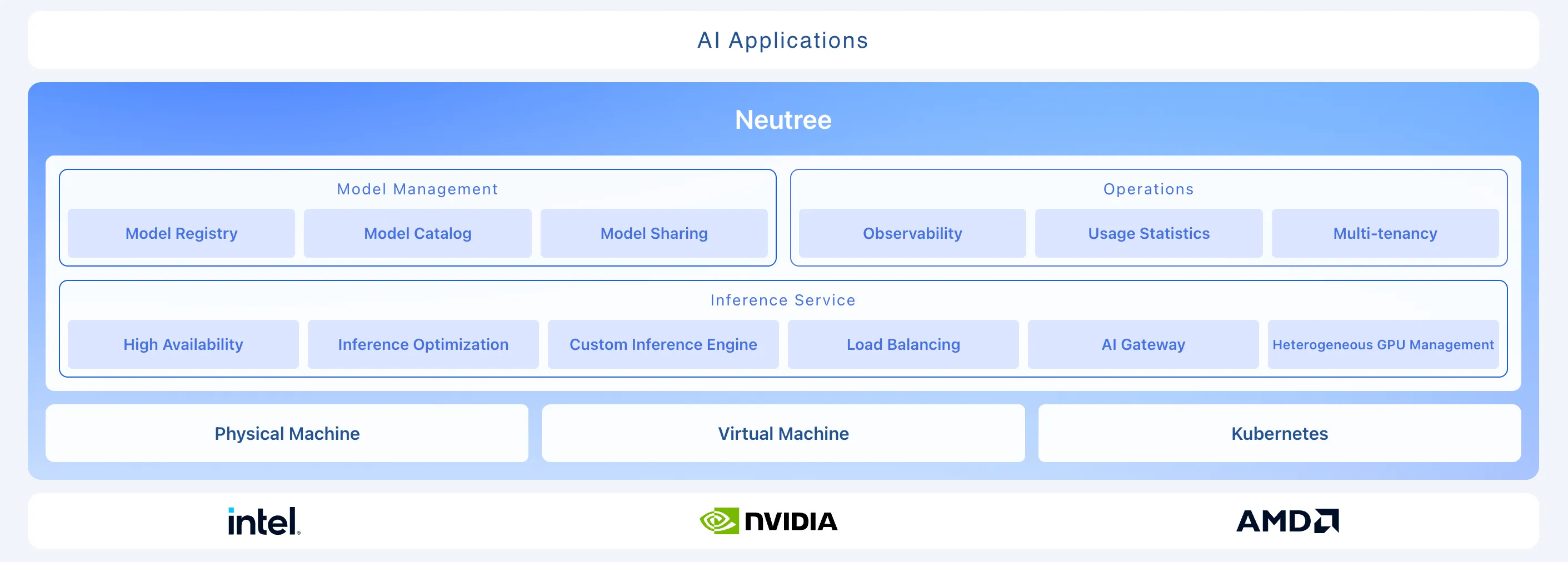

Neutree is an open-source Large Language Model (LLM) infrastructure management platform designed to help users safely and efficiently manage heterogeneous compute resources in local environments, and rapidly deploy and optimize inference services.

Neutree has a flexible, compatible architecture that supports multiple runtime environments and accelerator types. It integrates model management, compute scheduling, inference services, and permission governance into a single platform. This improves the efficiency and stability of AI infrastructure, reduces the complexity of resource management, and strengthens control over the security of data and models.

Neutree provides the following features:

- Unified and Efficient Compute Resource Pool
  - Supports multiple compute runtime environments, such as virtual machines, bare-metal servers, and Kubernetes clusters.
  - Manages accelerators from different vendors (such as NVIDIA and AMD) within a single platform, enabling unified resource scheduling. Through intelligent partitioning and isolation, multiple model instances can share the same accelerator, which avoids wasted compute and improves overall throughput.
- Convenient and Comprehensive Model Management
  - Supports mainstream model types such as Text-generation, Text-embedding, and Text-rerank to meet diverse application scenarios.
  - Pulls open-source models from Hugging Face in one click and also supports uploading private models.
  - Provides a model catalog that presets the inference engine, resource specifications, and runtime parameters, standardizing model deployment and reducing operational costs.
- Flexible and High-Performance Inference Services
  - A KVCache-aware load-balancing mechanism routes inference requests across multiple model replicas to raise KVCache hit rates. This significantly improves LLM inference performance and responsiveness in high-concurrency scenarios.
- Comprehensive Multi-Tenant and Permission Management
  - Gives each tenant independent resource and model spaces, ensuring business isolation and data security.
  - Supports fine-grained role-based access control (RBAC) covering model management, inference calls, and resource scheduling for unified governance.
  - Provides token usage statistics, enabling access rate limiting, cost control, and billing.
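This introduction does not describe how Neutree's KVCache-aware load balancing is implemented. The following is a minimal, hypothetical sketch of one common approach, prefix-affinity routing, which only illustrates the general idea: requests that share a prompt prefix (for example, a common system prompt) are sent to the replica that most likely already holds that prefix in its KV cache, unless that replica is much busier than the others. The names `Replica`, `route`, `BLOCK_SIZE`, and `max_imbalance` are illustrative assumptions, not part of Neutree's API, and the router's view of each replica's cache is simplified to a set of block hashes.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # hypothetical number of tokens per KV cache block


def block_hashes(tokens: list[int], block_size: int = BLOCK_SIZE) -> list[str]:
    """Hash each full block of the prompt, chaining hashes so that each one
    identifies the entire prefix up to and including that block."""
    hashes: list[str] = []
    prev = ""
    for start in range(0, len(tokens) // block_size * block_size, block_size):
        block = tokens[start:start + block_size]
        digest = hashlib.sha256(f"{prev}|{block}".encode()).hexdigest()
        hashes.append(digest)
        prev = digest
    return hashes


@dataclass
class Replica:
    """Hypothetical router-side view of one inference replica."""
    name: str
    active_requests: int = 0
    cached_blocks: set[str] = field(default_factory=set)


def matched_prefix_blocks(replica: Replica, hashes: list[str]) -> int:
    """Count how many leading prompt blocks this replica already has cached."""
    count = 0
    for h in hashes:
        if h not in replica.cached_blocks:
            break
        count += 1
    return count


def route(replicas: list[Replica], tokens: list[int], max_imbalance: int = 4) -> Replica:
    """Pick the replica with the longest cached prefix, among replicas whose
    load is within max_imbalance of the least-loaded replica."""
    hashes = block_hashes(tokens)
    least_loaded = min(r.active_requests for r in replicas)
    # Skip replicas that are much busier, so cache affinity cannot create hot spots.
    candidates = [r for r in replicas if r.active_requests - least_loaded <= max_imbalance]
    # Prefer the longest cached prefix; break ties by choosing the lower load.
    best = max(candidates, key=lambda r: (matched_prefix_blocks(r, hashes), -r.active_requests))
    best.active_requests += 1
    best.cached_blocks.update(hashes)  # assume the replica caches this prompt's blocks
    return best


# Two requests that share an 8-token "system prompt" land on the same replica.
replicas = [Replica("replica-a"), Replica("replica-b")]
system_prompt = list(range(8))
first = route(replicas, system_prompt + [100, 101, 102, 103])
second = route(replicas, system_prompt + [200, 201, 202, 203])
print(first.name, second.name)  # replica-a replica-a
```

The `max_imbalance` guard illustrates the trade-off such a router has to make: pure cache affinity maximizes KVCache hits but can overload a single replica, while pure least-loaded balancing spreads load but discards cached prefixes.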